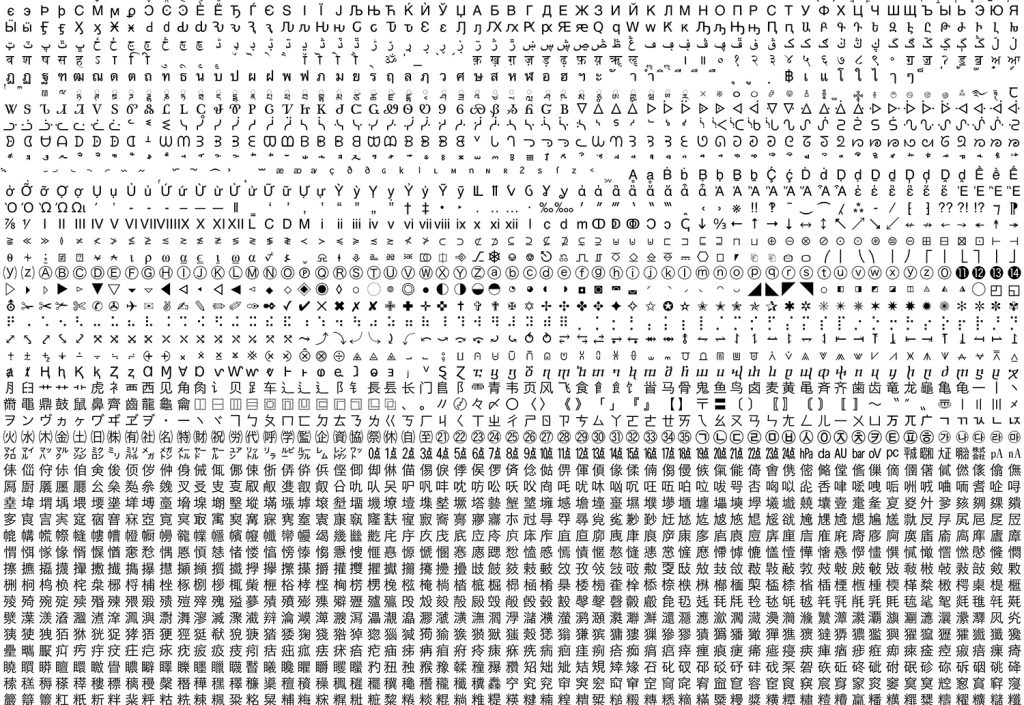

Waaay back in 2003 Joel Spolsky wrote about Unicode and why every developer should understand what it is and why it’s important. I remember reading that article (and have since forgotten most of it) but it really struck me how important character sets and Unicode are. So two years ago we published the first version of this blog post about Unicode. Now we figured it is about time to revisit our old friend Unicode and see why it’s important in today’s emoji filled world 🦄💩. You might not realize it, but you’re already working with Unicode if you’re working with WordPress! So let’s see what it is and why it matters to developers.

To answer the question “What is Unicode?” we should first take a look at the past.

ASCII encoding

Before we get into Unicode we need to do a little bit of history (my 4 year history degree finally getting use 🎉). Back in the day when Unix was getting invented, characters were represented with 8 bits (1 byte) of memory. In those days memory usage was a big deal since, you know, computers had so little. Zentgraf has a great example about how this works on his blog:

01100010 01101001 01110100 01110011
b i t s

All those 1s and 0s are binary, and they represent each character beneath. But writing in binary is hard work, and uh, would suck if you had to do it all the time. ASCII was created to help with this and is essentially a lookup table of bytes to characters.

The ASCII table has 128 standard characters (including upper and lower case a-z and 0-9). Only 95 of those are actually printable characters, which sounds fine if you speak English. In actual fact each character only requires 7 bits, so there’s a whole bit left over! This led to the creation of the extended ASCII table, which has 128 more fancy things like Ç and Æ as well as other characters. Unfortunately that’s not enough to cover the wide variety of characters used in languages throughout the world, so people created their own encodings.

Which ASCII?

For a long time the 256 character table worked well. There was really only one problem: which ASCII? At the end of the 90s, there were at least 60 standardized (and a few less so) extended ASCII tables to keep track of. We should probably be thankful that they all at least shared the first 128 characters. But out of necessity they were using the additional 128 characters very differently, so differently that accidentally selecting the wrong table could make a text unreadable.

Character encodings broke the internet

Alright, so now we kind of know what’s up with all those bajillion character encodings you may have encountered like Microsoft’s Windows-1252 and Big5: people needed to represent their own language and unique set of characters. And this mostly worked OK when documents weren’t shared with other computers. The internet broke all of this because people started sending documents encoded in their native encoding to other people. Sometimes people weren’t using the same encoding and they’d see something like this as an email subject line: �����[ Éf����Õì ÔǵÇ���¢!! To further complicate things, some encodings would use 16 bits rather than 8. This would make for massive lookup tables. Far larger than for ASCII!
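To make the lookup-table idea from this post a bit more concrete, here’s a minimal Python sketch. It isn’t from Joel’s article or from Zentgraf’s blog. The variable names are my own, and I picked Windows-1252 and ISO 8859-5 purely as two examples of extended tables, using Python’s built-in codecs. It decodes the four bytes that spell “bits”, then reads a single byte from the upper half of the table through two different encodings.

```python
# The four bytes from the "bits" example, written as 8-bit binary strings.
bits = "01100010 01101001 01110100 01110011"

# Turn each binary string into a number, then pack the numbers into bytes.
raw = bytes(int(chunk, 2) for chunk in bits.split())

# ASCII is just a lookup table from byte values to characters.
word = raw.decode("ascii")
print(word)  # bits

# A byte from the "extended" half (128-255) has no single agreed meaning.
high_byte = bytes([0xC7])

# The same byte, looked up in two different extended tables.
as_cp1252 = high_byte.decode("cp1252")        # Western European table
as_iso8859_5 = high_byte.decode("iso8859_5")  # Cyrillic table
print(as_cp1252, as_iso8859_5)

# Plain 7-bit ASCII has no entry for this byte at all.
try:
    high_byte.decode("ascii")
    ascii_ok = True
except UnicodeDecodeError:
    ascii_ok = False
print(ascii_ok)  # False
```

The first 128 values decode the same way everywhere, which is why “bits” survives. The byte 0xC7 comes out as Ç in one table and as the Cyrillic letter Ч in the other, and that is the “which ASCII?” problem in miniature.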
